AI in Society: A Future We Should Try to Change?

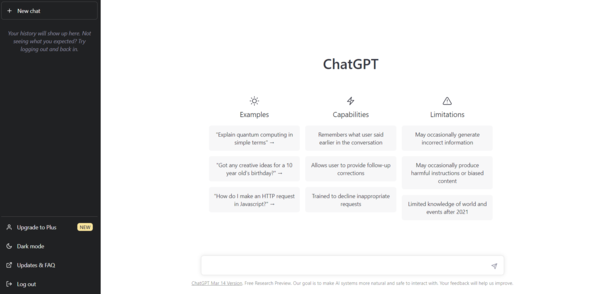

It used to be that only tech companies and academics had access to sophisticated AI. However, in November 2022, OpenAI released ChatGPT and ushered in a new era of AI, one in which the general public can easily access powerful artificial minds. Less than half a year later, OpenAI also released GPT-4, an improved version of the language model behind ChatGPT, initially to paying ChatGPT Plus subscribers. With AI becoming a tangible reality for everyone, this month’s Debate discusses whether or not we should resist the impending reign of AI.

Throughout history, only a handful of inventions have truly changed the world. Just as the printing press accelerated the Renaissance and the steam engine brought about the Industrial Revolution, AI has arrived to define a new era for humanity. Despite the arguments against AI, it will inevitably become an integral part of society, which is why it is extremely important that we accept AI sooner rather than later. Proper regulation of its use will play a key role in ensuring that AI becomes a crucial asset for humanity.

The past year has been a critical period for the integration of AI into society; millions of people experienced firsthand the seemingly limitless potential of AI when ChatGPT was released to the general public. Indeed, ChatGPT spread like wildfire, reaching one million users in just five days; for comparison, it took Instagram about two and a half months to do so. It is evident that people are fascinated by ChatGPT, and how could they not be? ChatGPT appears to be the ultimate assistant, seemingly more knowledgeable across more fields than any human could ever dream of being. Moreover, AI has been doing work for mainstream media for several years now. The Associated Press has been working with Automated Insights to publish sports articles since 2016. ESPN, arguably one of the most popular sports networks in the world, has also gone in the same direction.

However, there is a valid concern that powerful AI like ChatGPT will cause serious problems, for the media and academia at the very least. In response, OpenAI, the creator of ChatGPT, has developed an AI text classifier, a tool that can be used to detect text written by ChatGPT or other AI tools. Unfortunately, as of now, this classifier is still unreliable: it correctly identifies AI-written text only 26% of the time while falsely labeling human-written text as AI-written 9% of the time. Even so, safety measures like this classifier, which are necessary to keep the use of powerful AI in check, will only be developed and improved faster once AI is embraced by society.

Another creation of OpenAI that piqued public interest in the past year is DALL·E, a system that can create scarily accurate images from natural language prompts alone. However impressive that is, there has been much debate about the ethics of AI image generators. Currently, there are no regulations governing AI training data, so the works of artists have been freely used without their consent. In response, a class-action lawsuit has been filed against Stability AI and Midjourney, companies behind famous AI image generators, on the grounds of copyright infringement. One possible outcome that would allow AI art to coexist with traditional artists is for artists to be compensated fairly whenever their work is used, with their consent, to train AI. This situation further highlights why accepting AI into society as soon as possible is important: the regulations needed to determine what can be used as training data and how artists are compensated can only be put in place after that.

It cannot be denied that some forms of AI, like deepfakes, can be dangerous weapons used to harm people and disrupt society. However, unwisely branding all AI as evil will only allow such harmful systems to exist for longer, and it will also cost us an unimaginable amount of untapped potential. Solutions to ethical dilemmas about the use of helpful AI will only be delayed if time and effort are pointlessly spent arguing over whether these AI systems should have existed in the first place. For AI to do good, people have to accept the fact that AI has come and is here to stay.