As hate speech and misinformation online increase dramatically, social media platforms are censoring their content more than ever. While some support this trend towards stricter guidelines for online content, others are concerned about the greater problems censorship may bring. What should the future of social media be?

Social media was initially designed as a platform where users could create and share content. In recent years, however, it seems to be losing its direction, its purpose, and its very identity. While the need for some change in these platforms is understandable, increasing censorship is the wrong solution to the right problem, and one that will create greater problems that need not arise.

Putting free speech aside, censorship is an ineffective solution in the first place. A study in the journal Nature on the “global hate ecology” argued that policing hate speech within a single platform can make matters worse by creating “global dark pools in which online hate will flourish.” Angered by censorship on mainstream social media sites such as Twitter and Facebook, many conservatives in the US switched to alternative platforms such as Parler, which welcomed 3.5 million new users in just one week. Regardless of one’s stance on US politics, this suggests that policing content is ineffective, since there will always be another platform with fewer regulations. Such a shift in user demographics creates another problem. As particular groups of users move to alternative platforms, each site will soon host only people with similar viewpoints. Social media, which should foster the exchange of diverse ideas, will instead only reinforce the beliefs people already hold, and its very identity will be challenged.

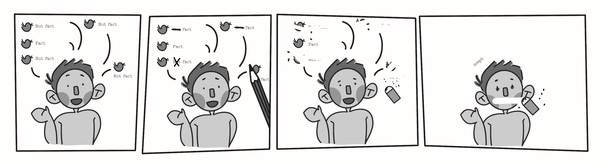

Then there is the question of free speech and bias. Mark Zuckerberg has stressed that he does not want Facebook to become an “arbiter of truth.” Current methods of censorship, such as deprioritizing certain posts, fact-checking, and outright bans, not only limit freedom of expression but also fall into the trap of being inherently biased and political. In October 2020, after the New York Post published an article critical of Hunter Biden, Joe Biden’s son, Twitter blocked its users from sharing the story and temporarily locked the New York Post’s Twitter account. Twitter first explained that the article violated its policy on hacked materials and then, after receiving backlash, said instead that it violated its rules on private information. Although other media outlets later questioned the validity of the Post’s reporting, Twitter’s decision to censor the article was questioned as well. Fact-checking and censoring content is one thing, but choosing what to fact-check and censor is another. Because it is impossible to monitor all online content to the same degree, there will always be inconsistencies in what is censored and what is not. This is why social media platforms cannot be “arbiters of truth”: arbiters are bound to have their own agendas and biases.

This is not to say that all online content should be free of regulation, or that misinformation and hate speech can be ignored. However, solutions to misinformation and online hate must be creative measures that respect users and the fundamental identity of social media. For instance, anti-vaccine misinformation is prevalent partly because it thrives in a “data void.” The void exists because there is already an academic consensus on vaccines, so accurate information is no longer actively promoted, leaving a gap that misinformation fills. Rather than resorting to censorship, social media platforms can address the data void by improving the algorithms that decide which content users see, for example by surfacing accurate information where it would otherwise be scarce. These algorithms could also be user-specific, giving users some freedom to adjust what they see. Of course, such customization must avoid trapping users in their own filter bubbles, so that social media remains diverse and open.

Current laws in many countries, including the US, do not hold big tech companies to the same standards as the government regarding free speech and censorship. Many people pressure social media platforms to increase their censorship, arguing that it is time for companies to take greater responsibility for content online. But the current trend of increasing censorship suggests that big tech companies are taking a deeply problematic shortcut to a façade of responsibility. It is time for social media to be creative and to become the pioneer it once was, while preserving its identity as a place of active discourse.